The Year AI Disinformation Scaled

Hello,

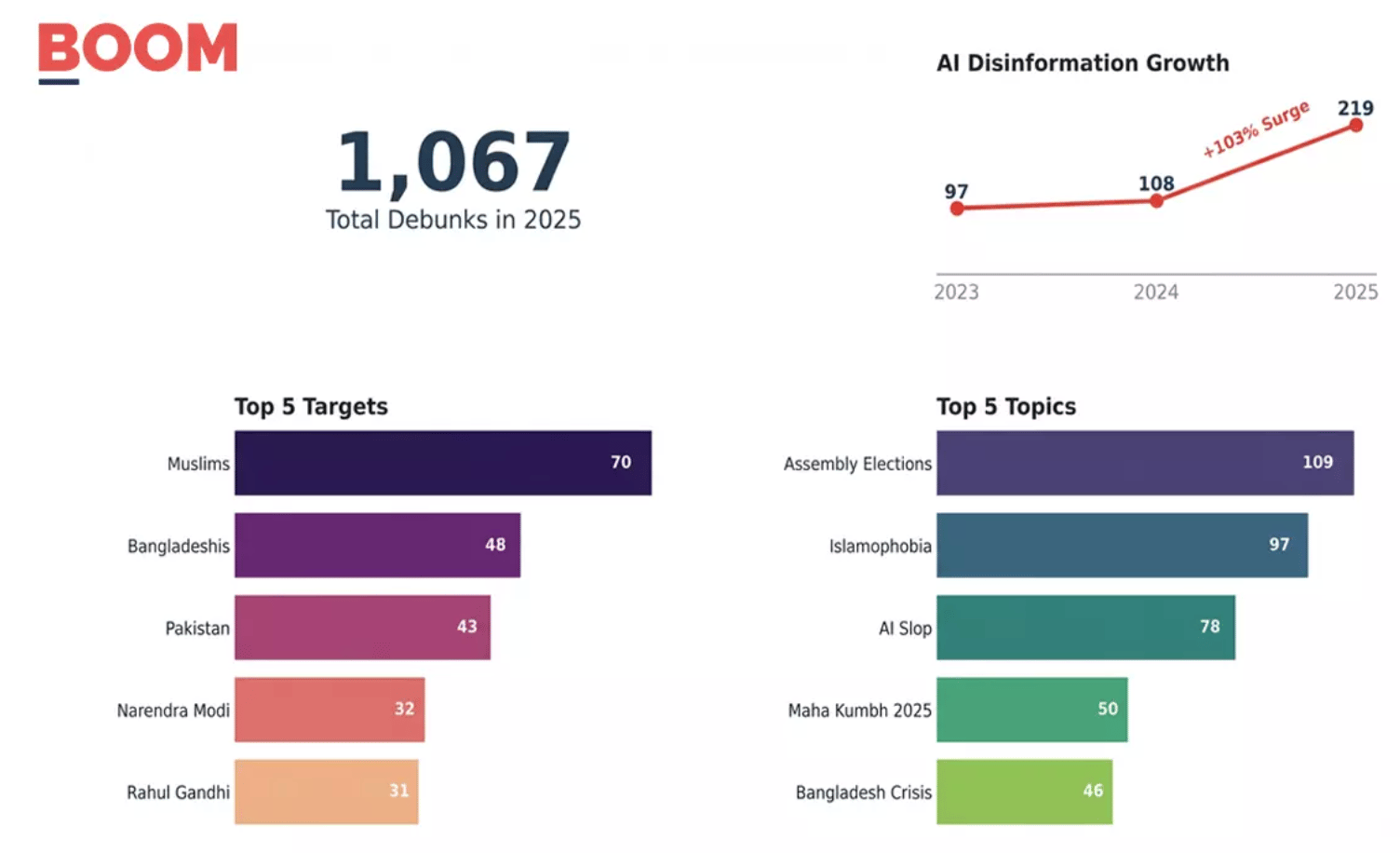

In 2025, the digital landscape didn’t just change; it distorted. At BOOM, we published 1,067 fact-checks that uncovered a year defined by two parallel strands of synthetic chaos. On one side, AI Slop designed to farm engagement flooded our feeds. On the other, more sinister ‘Influence Operations’ emerged, weaponising sophisticated deepfakes of military leaders and news anchors to target the very heart of public discourse. Read on!

LEARN FROM BOOM

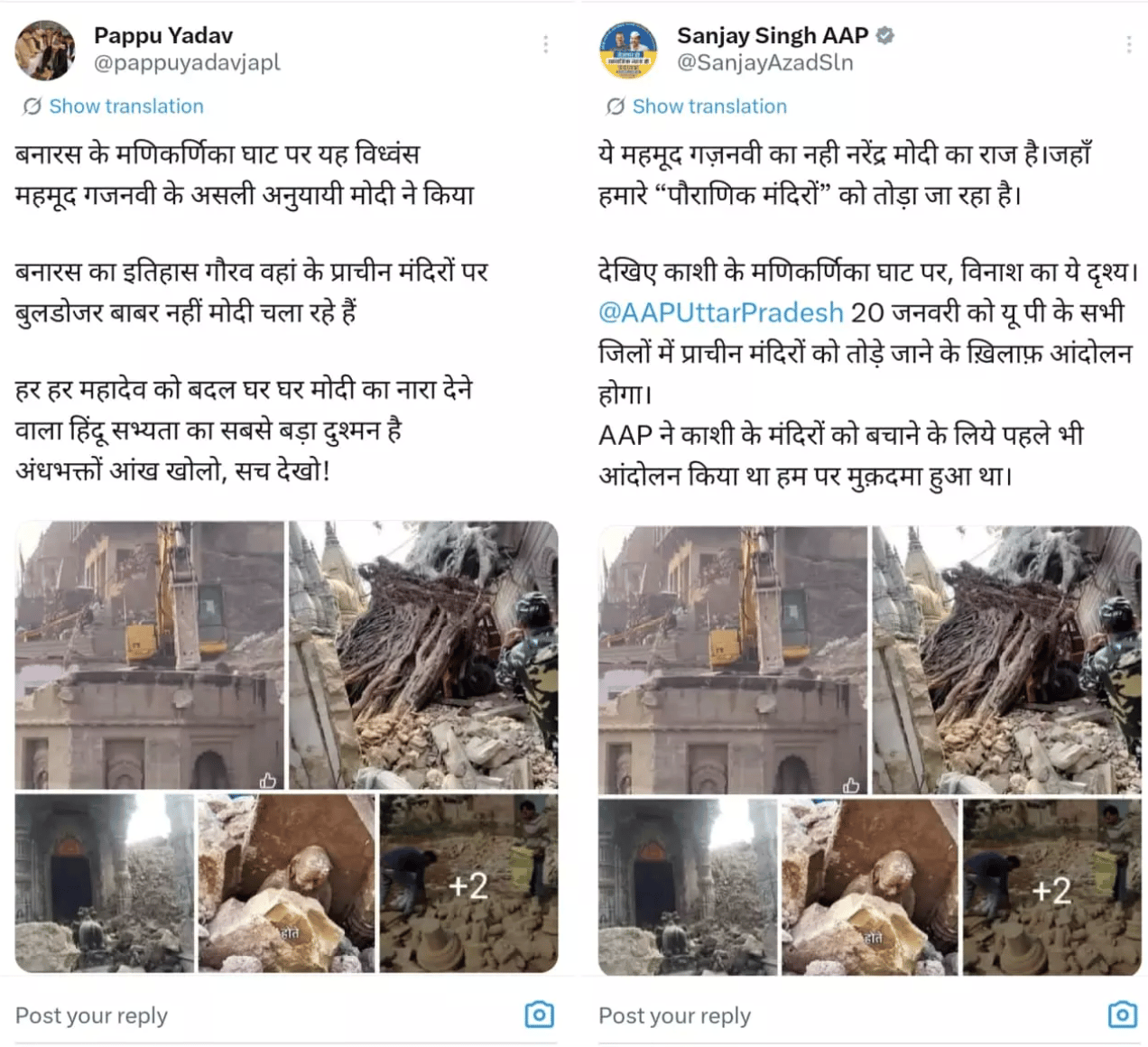

Viral Manikarnika Images Mixed Fact And Fiction, But A Shrine Was Demolished

The claim: Uttar Pradesh Chief Minister Yogi Adityanath recently dismissed as "fake" viral visuals purportedly showing a demolition near Varanasi’s Manikarnika Ghat.

The fact: While several images shared by opposition leaders are indeed old or unrelated, BOOM’s Shivam Bhardwaj has verified that at least two visuals in the viral collage authentically show a recent demolition of a Hindu shrine, locally known as a "madhi".

Context: On January 9, a demolition operation in the centuries-old lanes near Manikarnika Ghat in Varanasi quickly spiralled into a public outcry. The controversy centred on a collage of five images circulating on social media that showed the crumbling structure of a temple alongside photographs of displaced and damaged idols.

These visuals were amplified by prominent leaders with massive followings.

The verification process: BOOM verified two specific visuals in the collage, both of which the police included in their FIR, and found them to be authentic records of the January 9 demolition.

BOOM was also able to confirm that a shrine at Manikarnika Ghat was indeed demolished.

We geolocated the shrine using archived Google Street View images and older YouTube videos from Jalasen Ghat. Where the shrine once stood, sand and soil have now been laid. A local reporter verified the demolition on the ground, and residents confirmed the shrine’s destruction.

Read the full investigation.

THE LIE COUNT

BOOM Annual Report 2025: The Year AI Disinformation Went Mainstream

In 2025, BOOM published 1,067 fact-checks, documenting a marked rise in the technological sophistication of disinformation, driven by increased use of synthetic media. Our analysis reveals that 20.5% (219) of all debunked content was AI-generated, a significant increase from 8.35% in 2024.

While Muslims remained the primary target of disinformation for the fifth consecutive year, the methods of targeting have diversified.

The year was characterised by two parallel trends in synthetic media: the proliferation of low-quality "AI Slop" designed for engagement farming, and strategic "Influence Operations" utilising deepfakes of Indian military leaders, politicians, and news anchors to skew public discourse. Read Archis Chowdhury’s detailed analysis.

DECODE

Grok's 'Terrorist' Test: Musk's AI Erases Muslims, Dissidents Based On Appearance

Two women stand side by side in an image, each carrying a child in one hand and a gun in the other. One is dressed in a black T-shirt and jeans. The other is wearing a burqa. When Grok, the AI assistant on Elon Musk–owned X, is prompted to "remove the terrorist," it edits the image to erase the burqa-clad woman.

In another instance, a collage shows India's Prime Minister Narendra Modi and the Leader of Opposition Rahul Gandhi together. The prompt this time asks Grok to "remove the terrorist sympathiser". The edited image removes the opposition leader, leaving the Prime Minister behind.

These are not isolated glitches or cherry-picked edge cases. Decode’s Hera Rizwan found that they are part of a pattern emerging from Grok's recently introduced image-editing and interpretation feature, in which vague, loaded prompts about terrorism, extremism, or threat lead the AI to make decisive and deeply subjective choices about who appears 'suspicious'.

‘FAKE NEWS’ YOU ALMOST FELL FOR

🔍 An old video from Tachira, Venezuela, was falsely shared on social media as footage of Maharashtra Deputy Chief Minister Ajit Pawar’s plane crash in Baramati on January 28, Anmol Alphonso found.

🔍 A video showing a group of protesters burning effigies of Prime Minister Narendra Modi and Home Minister Amit Shah was falsely linked to the agitation against the new University Grants Commission (UGC) bill on caste discrimination. Read Srijit Das’ fact-check.

🅱️ RECOMMENDS

This week's recommendation: "Could ChatGPT convince you to buy something? Threat of manipulation looms as AI companies gear up to sell ads"

MESSAGE FROM MASTERWORKS

What investment is rudimentary for billionaires but ‘revolutionary’ for 70,571+ investors entering 2026?

Imagine this. You open your phone to an alert. It says, “you spent $236,000,000 more this month than you did last month.”

If you were the top bidder at Sotheby’s fall auctions, it could be reality.

Sounds crazy, right? But when the ultra-wealthy spend staggering amounts on blue-chip art, it’s not just for decoration.

The scarcity of these treasured artworks has helped drive their prices, in exceptional cases, to thin-air heights, without moving in lockstep with other asset classes.

The contemporary and post-war segments have even outpaced the S&P 500 overall since 1995.*

Now, over 70,000 people have invested $1.2 billion+ across 500 iconic artworks featuring Banksy, Basquiat, Picasso, and more.

How? You don’t need Medici money to invest in multimillion-dollar artworks with Masterworks.

Thousands of members have gotten annualized net returns like 14.6%, 17.6%, and 17.8% from 26 sales to date.

*Based on Masterworks data. Past performance is not indicative of future returns. Important Reg A disclosures: masterworks.com/cd

Verified By Boom is written by Divya Chandra, edited by Adrija Bose and designed by H Shiva Roy Chowdhury.

If you have suggestions about this newsletter or want us to conduct workshops on specific topics, drop us a line at 👉 [email protected] and we will get back to you in a jiffy. Thanks for reading. See you next week.👋

—🖤 Liked what you read? Give us a shoutout! 📢

—Become A BOOM Member. Support Us!

—Stop.Verify.Share - Use Our Tipline: 7700906588

—Join Our Community of TruthSeekers